Why CrashLoopBackOff is a good thing

Learn to love Kubernetes's most hated error.

Programming note: WhyThisK8s will be on break next week, back the week after that.

Most people know that Kubernetes is all about controllers and reconciliation loops. Or in plain English, when something goes wrong, Kubernetes tries to fix it automatically. You said you wanted one pod running? Kubernetes will damn well guarantee one pod is running. Various controllers will start, stop, and restart pods as necessary. The spec says one pod and there will be one pod!

This reconciliation has few limits. Status will equal spec in the end! If you enable cluster autoscaling, Kubernetes will even spin up virtual machines in the cloud, add nodes to the cluster, and make room for any poor pending pods.

The dangers of too much automation

In 1940, Disney released Fantasia, one of its earliest animated feature films. Even back then, this wonderful film predicted the risks of too much automation.

Kubernetes is smarter than the broomstick in the above clip, because it doesn’t just push changes in a single direction. In Kubernetes, reconciliation loops constantly look at the current status, and then decide whether to add or remove a bucket of water (er, a running pod).
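To make the two-directional idea concrete, here is a toy sketch of a reconciliation step. It is not how any real Kubernetes controller is written; the `reconcile` function, its arguments, and the action strings are all made up for illustration.

```python
def reconcile(desired_replicas, running_pods):
    """Toy reconciliation step (hypothetical, not real controller code).

    Compares the desired pod count with the pods currently running and
    returns the actions needed to close the gap, in either direction.
    """
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: ask for more.
        return ["create pod"] * diff
    if diff < 0:
        # Too many pods: remove the extras (here, the last ones in the list).
        return ["delete " + name for name in running_pods[diff:]]
    # Status already equals spec: nothing to do.
    return []


print(reconcile(1, []))                # spec says one pod, none running
print(reconcile(1, ["a", "b", "c"]))   # spec says one pod, three running
print(reconcile(1, ["a"]))             # already converged
```

Unlike the broomstick, the same function both adds and removes, and it does nothing once status matches spec.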

Still, like an engine working too hard, can Kubernetes overheat itself? Can a system work too hard at reconciliation? The answer is most certainly yes, and Kubernetes has built-in mechanisms to prevent that from happening.

What happens if you restart pods too much?

Imagine that someone rolls out a bad Deployment, and now every pod in that Deployment crashes non-stop. The code is rotten. There’s a bug and this pod is doomed to crash, forever and ever, until someone notices, checks what changed recently (ahem) and fixes the Deployment.

Should Kubernetes restart that pod immediately whenever it crashes?

The answer is no! As Albert Einstein supposedly said, “Insanity is doing the same thing over and over and expecting different results.”

When it comes to crashing pods, constantly restarting them would put unnecessary stress on the kubelet, the API server, and any upstream services that the pod communicates with on startup. And for what benefit? The pod is going to crash anyway.

Of course, in many cases, the pod does not crash again. Maybe it crashed because it depends on an external service that was down. Wait a few minutes, try again, and now the pod will succeed if the external service is back up. But that’s exactly the point. In many cases, it’s beneficial to wait a little before retrying.

What does BackOff refer to in CrashLoopBackOff?

To avoid constant restarts of a failing pod, Kubernetes waits after each failure before restarting the pod. What’s more, the more times a pod fails in a row, the longer Kubernetes waits before the next attempt. As the docs say:

After containers in a Pod exit, the kubelet restarts them with an exponential back-off delay (10s, 20s, 40s, …), that is capped at five minutes. Once a container has executed for 10 minutes without any problems, the kubelet resets the restart backoff timer for that container.
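The schedule in that quote is easy to sketch. The snippet below only reproduces the numbers from the docs (start at 10 seconds, double each time, cap at five minutes). The function name and parameters are my own invention, not anything from the kubelet source, and the 10-minute reset is left out for brevity.

```python
from itertools import islice


def backoff_delays(base=10, factor=2, cap=300):
    """Yield restart delays in seconds: 10, 20, 40, ... capped at `cap`.

    Hypothetical helper that mirrors the documented schedule only.
    """
    delay = base
    while True:
        yield delay
        # Double the wait after every failure, but never exceed the cap.
        delay = min(delay * factor, cap)


first_eight = list(islice(backoff_delays(), 8))
print(first_eight)  # [10, 20, 40, 80, 160, 300, 300, 300]
```

After five failures in a row, the delay hits the five-minute ceiling and stays there.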

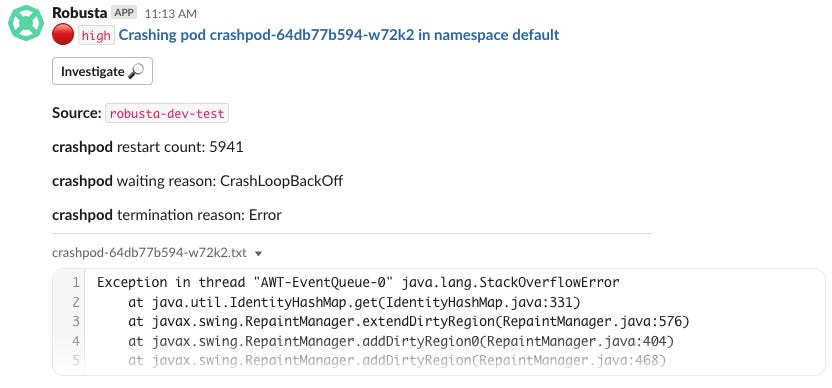

Let’s take an example from one of my own test clusters. I deployed a crashing pod during a long forgotten live stream. The pod crashes instantly on startup, managing only to puke the world’s longest Java exception.

This pod has crashed 5941 times so far. Thanks to Kubernetes’ backoff feature, the pod does not crash more than once every 5 minutes. Math says that this pod has been crashing non-stop for at least 20 days.
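Here is that math spelled out, as a rough back-of-the-envelope check. It assumes every restart waited the full five-minute cap, which slightly overstates the first few restarts, and it ignores the time the pod spends actually running before each crash.

```python
crashes = 5941
cap_seconds = 5 * 60  # assumption: every restart waited the full 5-minute cap

elapsed_seconds = crashes * cap_seconds
elapsed_days = elapsed_seconds / 86_400  # 86,400 seconds in a day

print(elapsed_seconds)         # 1782300
print(round(elapsed_days, 1))  # 20.6
```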

Would we be better off without the backoff? Assume the pod crashes after 1 second, and the kubelet is actually capable of restarting the pod immediately. Without the backoff, we’d have 1782300 crashes by now, one for every second of those 20 days. We wouldn’t have that many Slack notifications, as every monitoring system worth its salt can dedupe noise from similar events. But monitoring aside, would we be better off with 1782300 crashes and not 5941?

Of course not! Crashing more times would only add unnecessary stress to the API server for something that is going to fail anyway. And in cases where the pod will eventually succeed, it’s still better to take a deep breath, let the system stabilize, and then try again. No one benefits from non-stop obsessive attempts at doing the same thing again and again.

In short, hate the crash, love the backoff.